Object storage is becoming the de facto standard for data movement and management of long-term storage. Commonly used in cloud storage, popular object storage solutions include Amazon S3, offered by…

The concept of archiving information is not new. On the contrary, archives, whose name derives from the Greek word ‘archeia’, meaning public records, have been around for thousands of years, with the…

Cybersecurity drama strikes again as human error leads to China’s biggest data breach and perhaps the most significant hack of personal information in history.

By John Kranz, Software Developer, Spectra Logic

In “Beyond Measure: The Hidden History of Measurement from Cubits to Quantum Constants,” author James Vincent explores the link between measurement and human…

Welcome to Spectra’s webinar Q&A roundup. In this Q&A blog series we will pick relevant questions from our recent webinars and publish the responses here. Spectra recently hosted a virtual…

Magnetic tape is the lowest-cost and longest-lasting storage medium available for long-term data preservation. While it is one of the more mature storage media on the market, it…

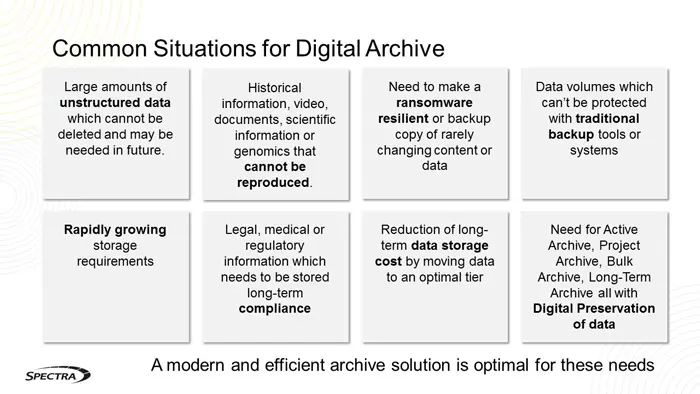

A long-term modern archive solution should substantially lower operational, maintenance, utility, support and administrative costs. It should be simple to use and easy to install, and free current staff from…

Data is growing exponentially and its value is often unpredictable. Data archiving is an essential part of managing data storage throughout its lifecycle. According to TechTarget, data archiving is defined…

There has been a rapid increase in the amount of digital information created, stored and shared in recent years. This explosion of data is driven by a variety of…

“We’ve seen all the cloud has to offer, and tried most of it… The savings promised in reduced complexity never materialized. So we’re making our plans to leave.” These were…